Debra Gelman writes in A List Apart about designing web registration processes for 6- to 8-year-olds. She shares fascinating stories and best practices. For example, many parents have trained their children never to reveal anything about themselves online:

“As a result, kids are wary of providing any data, even information as basic as gender and age. In fact, many kids fib about their ages online. A savvy eight-year-old girl, when prompted by the Candystand site to enter her birthdate, said, ‘I’m going to put that I’m 12. I know it’s lying, but it’s ok because I’m not allowed to tell anyone on the internet anything real about me.’…Similarly, a seven-year-old boy refused to create a Club Penguin account because it asked for a parent’s e-mail address. ‘You can’t say anything about yourself on the web. If you do, people will figure out where you live and come to your house and steal your stuff.’”

Gelman goes on to share an example of how to collect innocuous, non-identifying data (e.g., grade level) without triggering children’s anxieties about sharing personal information.

She also describes the importance of using images that are “simple, clear representations of common items that are part of a child’s current context,” while trying to avoid symbolic meanings:

“It’s important to note that while pictures are useful, symbols and icons can be problematic, because, at this age kids are just learning abstract thought. While adults realize that a video camera icon means they can watch videos, kids associate the icon with actually making videos. In a recent usability test evaluating popular kids’ sites, a six-year-old girl pointed out the video camera icon and said, ‘This is cool! It means I can make a movie here and share it with my friends.’ She wasn’t able to extrapolate the real meaning of the icon based on site context and content.”

The lesson is clear: know your users.

Read the article.

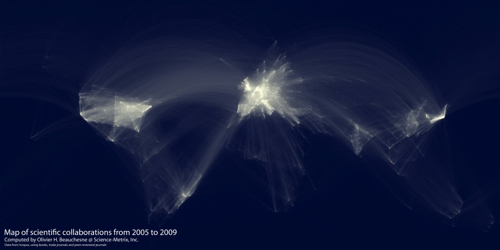

So you want to share scientific data, but what license to use? The Panton Principles have something to say: